The Apprentice Paradox: Why Tech Leaders Refuse to Replace Junior Developers

Tech leaders are resisting the use of AI to replace junior developers, even as AI tools automate more of the tasks juniors traditionally perform. The reason reveals a structural truth about how organisations actually produce knowledge, and why eliminating entry-level roles would destroy the pipeline that creates senior talent.

The Apprentice Paradox

Matt Garman, the chief executive of Amazon Web Services, does not often speak carelessly. When he called replacing junior developers with artificial intelligence “one of the dumbest things I’ve ever heard,” he was not merely defending entry-level workers. He was articulating a structural truth that many in Silicon Valley have begun to grasp: the factory floor cannot be automated without destroying the factory itself.

The statement landed in an industry already drunk on automation promises. GitHub Copilot and ChatGPT had demonstrated that AI could write boilerplate code, debug simple errors, and produce documentation faster than most humans. In one GitHub study, developers using Copilot completed a routine coding task 55 percent faster than those working without it. Venture capitalists salivated. Chief financial officers did sums on napkins. If AI could do what juniors do, why pay juniors?

Yet the expected culling has not materialised. Major technology firms continue hiring entry-level engineers. Apprenticeship programmes expand rather than contract. Something in the logic of replacement does not survive contact with reality.

The answer lies not in sentimentality or labour politics, but in the architecture of how organisations actually produce knowledge. Junior developers are not merely cheap labour performing automatable tasks. They are the metabolic system through which expertise propagates, quality standards transmit, and institutional memory survives. Eliminate them, and you do not get a leaner organisation. You get a dying one.

The Pipeline Nobody Sees

The conventional case for replacement runs as follows. Junior developers cost money—typically $70,000 to $120,000 annually in major technology hubs. They require supervision that consumes senior engineers’ time. For the first two years, they are often net negative contributors, consuming more value in mentorship than they produce in code. AI tools now perform many of their traditional tasks at a fraction of the cost. The arithmetic seems obvious.

This analysis is not wrong. It is incomplete.

Consider what happens inside a technology company over a decade. The senior architects who design systems today were junior developers who wrote unit tests seven years ago. The engineering managers who make hiring decisions learned to evaluate talent by being evaluated themselves. The principal engineers who spot subtle design flaws developed that intuition through years of making and fixing mistakes. The pipeline is invisible until it breaks.

Biological systems offer an instructive parallel. Between trophic levels, 80 to 90 percent of energy is lost, much of it as metabolic heat. This is a thermodynamic constraint, not an inefficiency to be optimised away. Organisations attempting to flatten hierarchies or eliminate junior roles fight against an analogous structural reality. The apparent waste is actually the cost of knowledge transmission.

Medieval guilds understood this intuitively. Their monopoly power—forbidding non-members from practising trades—was not merely economic rent-seeking. It created the structural precondition for tacit knowledge transfer by forcing extended co-presence between masters and apprentices. The monopoly did not just protect profits. It protected the transmission mechanism itself.

When technology leaders resist eliminating junior roles, they are not being sentimental. They are recognising that their organisations’ future depends on a pipeline that takes years to build and months to destroy.

What AI Actually Does

The capabilities of AI coding tools are real but narrower than the hype suggests. The 2024 Stack Overflow Developer Survey found that 62 percent of developers now use AI tools, up from 44 percent the previous year. Among developers who use AI tools, ChatGPT is the most widely used, at 82 percent. The perceived benefits are tangible: 81 percent cite higher productivity, and 71 percent of those learning to code say AI speeds up their learning.

But GitClear’s analysis of 153 million changed lines of code reveals the shadow side. Code churn, the rate at which recently written code must be rewritten, is projected to double in 2024 compared to the pre-AI baseline of 2021. Developers may write code 55 percent faster. They also write code that requires more frequent correction.
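To make the churn metric concrete, here is a minimal Python sketch. It assumes a definition along the lines GitClear uses (the share of newly written lines that are updated or reverted less than two weeks after being authored). The `LineRecord` type, the `churn_rate` function, the 14-day window and the sample data are all hypothetical illustrations, not GitClear’s methodology or data.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Assumed window: lines reworked less than two weeks after authoring count as churn.
CHURN_WINDOW = timedelta(days=14)


@dataclass
class LineRecord:
    """Hypothetical history of one authored line of code."""
    authored: date
    revised: Optional[date] = None  # None if the line was never touched again


def churn_rate(lines: list[LineRecord]) -> float:
    """Fraction of lines updated or reverted within CHURN_WINDOW of authoring."""
    if not lines:
        return 0.0
    churned = sum(
        1
        for line in lines
        if line.revised is not None and line.revised - line.authored < CHURN_WINDOW
    )
    return churned / len(lines)


# Hypothetical sample: four lines, two of them reworked within the window.
sample = [
    LineRecord(date(2024, 1, 1)),                     # never revised
    LineRecord(date(2024, 1, 1), date(2024, 1, 5)),   # revised after 4 days: churn
    LineRecord(date(2024, 1, 2), date(2024, 1, 10)),  # revised after 8 days: churn
    LineRecord(date(2024, 1, 1), date(2024, 3, 1)),   # revised two months later: not churn
]
print(f"churn rate: {churn_rate(sample):.0%}")  # churn rate: 50%
```

In these terms, churn doubling means twice the fraction of fresh lines being reworked inside the window, a correction cost that raw writing speed does not show.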

The pattern mirrors what happens when you give students calculators before they understand arithmetic. The immediate task gets completed. The underlying competence does not develop. AI tools excel at generating syntactically correct code that passes basic tests. They struggle with the contextual judgment that distinguishes code that merely works from code that can be maintained, extended, and debugged by humans who did not write it.

Experienced developers recognise what the profession calls “code smells”: patterns that technically function but signal deeper problems. This recognition is not algorithmic. It emerges from years of encountering the downstream consequences of poor design choices. According to the somatic marker hypothesis, the ventromedial prefrontal cortex stores links between experiential knowledge and bioregulatory states to facilitate prediction in uncertain situations. Code smell detection plausibly works the same way: experienced developers store links between code patterns and the pain those patterns later caused.

AI cannot transmit this embodied knowledge because AI does not experience the pain. It generates code without consequences, feedback loops without closure, patterns without the suffering that makes patterns meaningful.

The Quality Ratchet

The degradation is not immediately visible. In fact, it initially appears as improvement.

When AI handles routine tasks, senior developers have more time for complex work. Code ships faster. Metrics improve. Quarterly reports glow. The problems accumulate in places that quarterly reports do not measure.

Technical debt compounds silently. Documentation grows stale because the juniors who would have written it while learning no longer exist. Institutional knowledge concentrates in fewer heads, each representing a larger single point of failure. The codebase becomes a palimpsest that only its original authors can read.

Techniques for recovering medieval palimpsests use pseudocolour imaging to make both the original and overwritten texts simultaneously visible. AI refactoring tools operate on the opposite principle: they create a single “clean” output that erases the archaeological layers showing why code evolved. The history disappears. With it goes the understanding.

The Ifugao rice terraces of the Philippines, often said to be two thousand years old, have survived not through engineering manuals but through ritual practices, chants, and symbols emphasising ecological balance. Junior developers serve an analogous function: they are the living documentation, the questions that force seniors to articulate what they know, the ritual practice that encodes why maintenance matters.

Remove the ritual. Watch the terraces crumble.

The Legal Minefield

Even organisations willing to accept quality degradation face a harder constraint: liability.

The United States Copyright Office has ruled that works require “sufficient human control” to qualify for copyright protection. AI-generated code exists in legal limbo. Who owns it? Who is liable when it fails? The answers remain unclear, and unclear answers terrify corporate counsel.

The European Union’s new Product Liability Directive, which explicitly covers software, creates a rebuttable presumption of defectiveness where technical complexity makes a defect excessively difficult for a claimant to prove. This is not a minor procedural adjustment. In such cases it effectively shifts the burden of proof, requiring companies to demonstrate that AI-generated components did not cause harm rather than requiring plaintiffs to prove they did.

Companies are discovering a perverse incentive structure. Retaining junior developers provides legal insurance—human witnesses who can testify to the creative process, human judgment that anchors copyright claims, human accountability that satisfies regulators. The junior developer becomes less a productive worker than a liability shield.

Statistical software gained court admissibility under the Daubert standard through decades of transparent, reproducible outputs that experts could explain and defend. AI code generators produce opaque, often non-deterministic outputs that the humans who deployed them cannot readily reproduce or explain. The evidentiary status remains contested. Prudent firms hedge their bets.

The Thermodynamics of Talent

The deeper issue is thermodynamic. Organisations, like ecosystems, require energy gradients to function.

In forest ecosystems, decomposer communities break down complex organic matter into usable nutrients. Diversity among decomposers enables processing of different material types. Eliminate diversity, and nutrient cycling collapses. The forest starves amid apparent plenty.

Junior developers function as organisational decomposers. They break down complex problems into reusable patterns. They translate senior expertise into documented procedures. They ask questions that reveal unstated assumptions. Their diversity of backgrounds—bootcamp graduates, computer science majors, career changers—enables processing of different problem types.

The economics of replacement ignore this function. A junior developer costs roughly $100,000 annually. An AI coding tool costs perhaps $20 per user per month. The arithmetic favours automation. But the arithmetic measures only the visible transaction, not the invisible metabolism.
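The replacement arithmetic fits in a few lines of Python. The figures are the essay’s own rough estimates (salaries of $70,000 to $120,000 in major hubs, a round $100,000, and perhaps $20 per user per month for a tool); the function names are illustrative, and nothing here is measured data. Note what the sketch cannot express: it has no term for mentorship or knowledge transfer, which is exactly the metabolism the calculation leaves out.

```python
# Rough figures from the essay; none of these are measured costs.
TOOL_COST_PER_MONTH = 20               # USD per user per month ("perhaps $20")
SALARIES = (70_000, 100_000, 120_000)  # USD per year: low end, round figure, high end


def annual_tool_cost(monthly: float) -> float:
    """Annual licence cost for one seat of an AI coding tool."""
    return monthly * 12


def cost_ratio(salary: float, monthly_tool_cost: float) -> float:
    """How many annual tool seats one junior salary would pay for."""
    return salary / annual_tool_cost(monthly_tool_cost)


for salary in SALARIES:
    ratio = cost_ratio(salary, TOOL_COST_PER_MONTH)
    print(f"${salary:,} salary = {ratio:,.0f} annual tool seats")
```

On these assumptions one junior salary buys roughly 292 to 500 annual seats, which is why the visible transaction looks so lopsided.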

Sacrificial anodes protect ships’ hulls by corroding in place of the structural metal. They must have more negative electrochemical potential than the protected material—the protection mechanism requires inequality. Junior developers serve an analogous protective function. They absorb the routine errors, the tedious debugging, the documentation drudgery that would otherwise corrode senior engineers’ time and attention. The skill gap is not a problem to solve. It is the mechanism that makes protection possible.

The Apprenticeship Alternative

What, then, should organisations do?

The answer is not to reject AI tools. The productivity gains are real. The competitive pressure is genuine. Firms that refuse to adopt will fall behind firms that embrace augmentation intelligently.

The answer is to redesign the apprenticeship model for an AI-augmented world.

This means treating AI as scaffolding rather than replacement. Vygotsky’s zone of proximal development describes the space between what learners can do independently and what they can achieve with guidance. AI tools can keep learners in this zone by supporting work just beyond their current capability while leaving the learning-rich challenges intact.

The danger is that the same mechanism that makes AI effective as scaffolding—reducing cognitive load—becomes the pathway for skill atrophy. AI systems that successfully keep learners in their zone of proximal development by managing disorientation are simultaneously removing the productive struggle that builds expertise.

Organisations must be deliberate. Junior developers should use AI tools, but with structured reflection on what the tools produced and why. Code review should include not just “does this work” but “do you understand why this works.” Mentorship programmes should explicitly address the gap between generating code and comprehending systems.

This requires investment. Senior engineers must spend time teaching rather than shipping. Productivity metrics must account for knowledge transfer, not just feature delivery. The short-term costs are real.

The alternative is a slow-motion collapse of the talent pipeline, visible only when the senior engineers who remember how things work begin to retire and no one remains who learned from them.

The Quiet Calculation

Technology leaders pushing back against junior developer replacement are not being altruistic. They are being rational.

The Stack Overflow survey shows that developers learning to code adopt AI tools at higher rates than professionals—82 percent versus 70 percent. Juniors are, as Garman noted, “the most leaned into your AI tools.” They are not threatened by AI. They are the generation that will determine how AI integrates into software development practice.

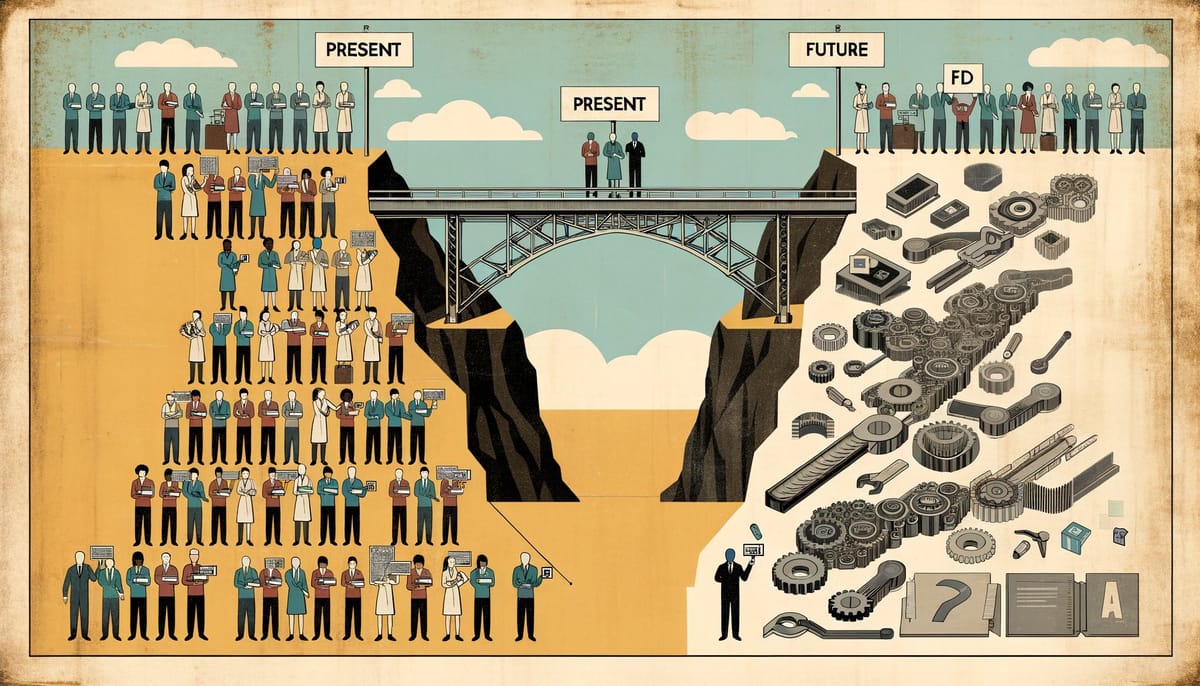

Eliminate them now, and you lose the bridge generation. You get senior engineers who remember the before-times and AI systems that never learned from human judgment. The gap becomes unbridgeable.

Cloud providers understand this particularly well. AWS Activate, Azure for Startups, and Google Cloud credits function as the modern equivalent of imperial tributary relationships—not merely financial subsidies but legitimisation tokens that signal a startup’s relationship with the platform empire. These programmes require a pipeline of developers who will build on the platforms. No juniors, no pipeline. No pipeline, no empire.

The resistance is not ideological. It is imperial self-interest dressed in humanitarian language.

The Fork Ahead

The technology industry faces a genuine choice, though it prefers not to acknowledge it.

Path one: aggressive automation. Replace junior developers with AI tools. Capture immediate cost savings. Write off quality degradation as acceptable overhead. Watch the talent pipeline atrophy over five to seven years. Discover, too late, that the senior engineers who could have trained replacements have retired or burned out. Import talent from regions that maintained apprenticeship models. Become dependent on external knowledge sources.

Path two: augmented apprenticeship. Retain junior developers but restructure their roles. Use AI for routine tasks while preserving learning-rich challenges. Invest in mentorship infrastructure. Accept lower short-term productivity for higher long-term capability. Build the workforce that will develop the next generation of AI tools rather than merely consume them.

Most firms will choose neither path deliberately. They will drift toward the first while publicly endorsing the second. They will cut junior headcount during downturns and struggle to rebuild during upswings. They will discover that talent pipelines, once broken, do not reassemble on demand.

The firms that thrive will be those that recognise what Garman articulated: the dumbest thing is not using AI. The dumbest thing is using AI to destroy the system that produces the humans who know how to use AI well.

Frequently Asked Questions

Q: Will AI eventually be able to fully replace junior developers? A: Current AI tools excel at generating code but cannot replicate the judgment, contextual understanding, and institutional knowledge that junior developers acquire through experience. The replacement question misframes the issue—the value of juniors lies not in the code they write but in the expertise they develop and eventually transmit.

Q: How are major tech companies actually using AI coding tools today? A: Most large technology firms deploy AI tools as augmentation rather than replacement. GitHub Copilot and similar tools handle boilerplate code, suggest completions, and assist with documentation, while human developers retain responsibility for architecture, code review, and system design. The 62 percent adoption figure measures how many developers use these tools, not how many developers the tools have replaced.

Q: What skills should junior developers focus on to remain valuable? A: System-level thinking, code review capability, and the ability to understand why code works—not just that it works—will differentiate valuable juniors from those whose skills AI can replicate. Communication, mentorship readiness, and cross-functional collaboration also resist automation.

Q: Are bootcamp graduates more or less vulnerable to AI displacement than computer science graduates? A: Bootcamp graduates actually show higher employment rates than traditional computer science graduates in some studies (79 percent versus 68 percent), suggesting practical skills remain valued. However, both face pressure to demonstrate capabilities beyond what AI tools provide, shifting competition toward judgment and system understanding rather than syntax knowledge.

The Inheritance

The medieval monasteries that preserved classical knowledge through the Dark Ages did so not through superior technology but through institutional commitment to transmission. Monks copied manuscripts by hand not because copying was efficient but because copying was how knowledge survived.

Technology companies face an analogous choice. The efficient path—AI automation of junior roles—optimises for the present while mortgaging the future. The sustainable path—AI-augmented apprenticeship—accepts present costs for future capability.

The leaders pushing back against replacement understand something their spreadsheet-focused peers do not. Organisations are not machines to be optimised. They are living systems that reproduce through teaching. Eliminate the teaching function, and you do not get a more efficient machine. You get a machine that cannot build its own replacement parts.

The apprentice is not overhead. The apprentice is the point.